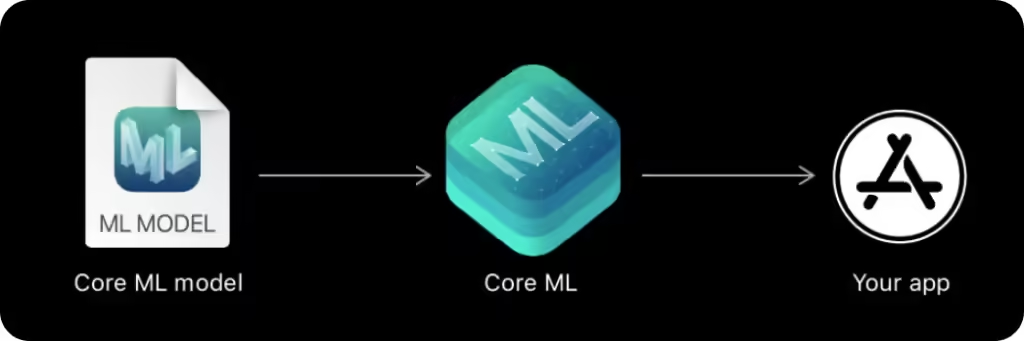

Machine learning is becoming a cornerstone of modern app development, and with the release of Core ML 4.0, Apple has made it easier than ever to run powerful machine learning models directly inside iOS apps. Whether it’s personalized recommendations, real-time object detection, or natural language processing, Core ML 4.0 lets developers build advanced AI capabilities into their apps, creating more engaging, intelligent, and responsive user experiences.

In this post, we’ll look at what’s new in Core ML 4.0, how it is changing iOS development in 2024, and best practices for integrating it into your apps.

What’s New in Core ML 4.0?

Core ML 4.0 introduces several updates that make it easier for developers to work with machine learning on iOS. The most notable are on-device model updates, better support for larger and more complex models, and integration with visionOS for AR applications.

1. On-Device Model Updates

One of the standout features of Core ML 4.0 is the ability to update machine learning models directly on the user’s device, without shipping an app update. This is a major advantage for apps that rely on personalization or need to refine their models continually. Because updates happen on-device, apps can adapt to each user in near real time, for example by improving predictive text, adjusting recommendations, or refining image recognition.
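To make the idea of an on-device update concrete, here is a minimal, framework-free Swift sketch: a tiny linear model whose parameters are refined with stochastic gradient descent each time the app observes a new labelled example. It illustrates the underlying technique only and is not Core ML’s API (in Core ML itself, updatable models are retrained on-device with `MLUpdateTask`). The `OnlineLinearModel` type, its learning rate, and the training data are hypothetical.

```swift
/// A tiny linear model y = w·x + b whose parameters are refined on-device,
/// one labelled example at a time (stochastic gradient descent on squared error).
struct OnlineLinearModel {
    private(set) var weights: [Double]
    private(set) var bias: Double = 0
    let learningRate: Double

    init(featureCount: Int, learningRate: Double = 0.05) {
        weights = [Double](repeating: 0, count: featureCount)
        self.learningRate = learningRate
    }

    /// Prediction is the dot product of weights and features, plus the bias.
    func predict(_ features: [Double]) -> Double {
        zip(weights, features).reduce(bias) { $0 + $1.0 * $1.1 }
    }

    /// One SGD step: move each parameter against the gradient of (prediction - target)^2 / 2.
    mutating func update(features: [Double], target: Double) {
        precondition(features.count == weights.count, "feature count mismatch")
        let error = predict(features) - target
        for i in weights.indices {
            weights[i] -= learningRate * error * features[i]
        }
        bias -= learningRate * error
    }
}

// Usage: the app feeds in examples as the user interacts; here the hidden rule is y = 2x.
var model = OnlineLinearModel(featureCount: 1)
for _ in 0..<500 {
    for x in [1.0, 2.0, 3.0] {
        model.update(features: [x], target: 2 * x)
    }
}
print(model.predict([4.0]))  // close to 8
```

Because each update touches only a handful of numbers, it can run cheaply after every interaction, and the refined parameters never have to leave the device.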

2. Support for More Complex Models

Core ML 4.0 has enhanced support for larger and more complex models, including deep learning models that demand significant computational power. By running work on the GPU through Metal Performance Shaders (MPS) and on the Apple Neural Engine, iOS devices can handle far more capable models than before. This opens up more sophisticated applications, such as advanced medical imaging, real-time translation, and highly accurate object detection.
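In practice, much of this hardware support comes down to which compute units a model is allowed to use. The sketch below assumes you ship a compiled model bundle (`.mlmodelc`) whose location is passed in as the hypothetical `compiledModelURL`, and loads it with `MLModelConfiguration.computeUnits` set to `.all` so Core ML may use the CPU, the GPU, and the Neural Engine. It only runs on Apple platforms.

```swift
import CoreML
import Foundation

/// Loads a compiled Core ML model with access to every compute unit.
/// `compiledModelURL` is a hypothetical location of an .mlmodelc bundle.
func loadModel(at compiledModelURL: URL) throws -> MLModel {
    let configuration = MLModelConfiguration()
    // .all lets Core ML use the CPU, the GPU (via Metal), and the Neural Engine.
    configuration.computeUnits = .all
    return try MLModel(contentsOf: compiledModelURL, configuration: configuration)
}
```

Other settings such as `.cpuOnly` are useful when comparing accuracy or speed across hardware, and `.cpuAndNeuralEngine` is available from iOS 16.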

3. visionOS Integration

With the release of visionOS, Core ML 4.0 can power AR and VR applications, enabling developers to create immersive, AI-powered experiences. From recognizing objects in augmented reality to controlling digital environments with gestures, Core ML 4.0’s integration with visionOS is paving the way for next-generation AR experiences.

Why Core ML 4.0 Is a Game-Changer for iOS Apps

Core ML 4.0 brings several key advantages to iOS development: a better user experience, stronger data privacy, and optimized performance. Because processing happens on-device, developers can build smarter features while maintaining a high level of security and privacy.

1. Enhanced User Experience

Apps that use Core ML 4.0 can offer real-time insights and personalized interactions, enhancing the overall user experience. For example, an e-commerce app can analyze shopping behavior and offer tailored product suggestions, while a fitness app can provide real-time feedback on user movements and suggest improvements.

• Example: A personal assistant app can analyze user patterns, predict calendar events, and provide reminders based on learned behavior, all in real time and without an internet connection.

2. Data Privacy

With the growing focus on data privacy, on-device processing matters: because Core ML runs models on the device, user data does not have to leave it for inference. This lets developers implement AI features while adhering to strict privacy guidelines, offering trustworthy, privacy-compliant experiences.

3. Performance Optimization

Core ML 4.0 is optimized for Apple’s A-series processors and the Neural Engine, so it can run intensive machine learning tasks quickly. This allows developers to build AI features that stay fast and responsive.

Best Practices for Integrating Core ML 4.0 into iOS Apps

To make the most of Core ML 4.0, it’s crucial to follow best practices that ensure your app runs smoothly and provides the best possible user experience.

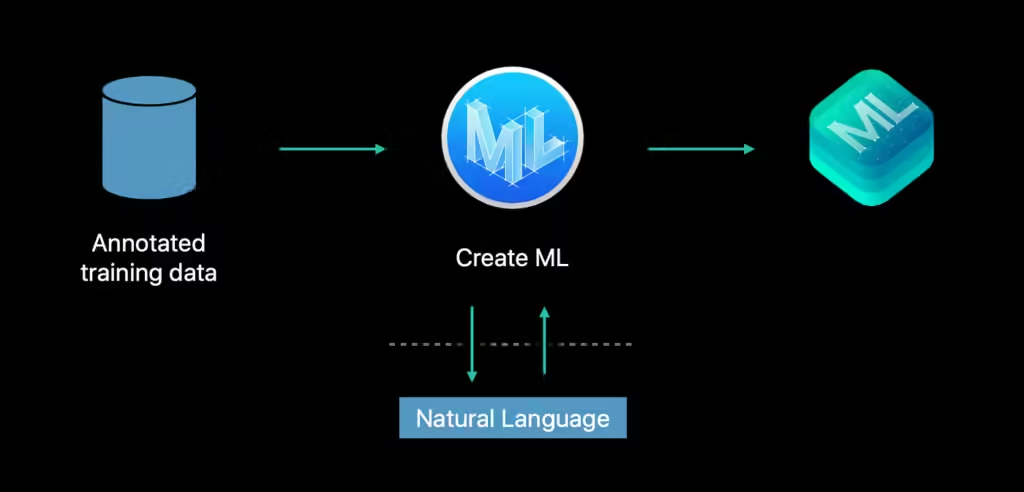

1. Start with Pre-Trained Models

If you’re new to machine learning, start by using Apple’s pre-trained models. These models cover common use cases like image classification, object detection, and sentiment analysis. By using pre-trained models, you can integrate machine learning features quickly and efficiently without having to train models from scratch.

• Pro tip: Use models like MobileNetV2 for image recognition or Sentiment Polarity for analyzing user reviews. You can customize these models later as your app evolves.
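Once a pre-trained classifier such as MobileNetV2 is in your project, much of the remaining work is interpreting its output. Image classifiers commonly report a probability for every label; the plain-Swift sketch below (the `topLabels` helper and its default thresholds are hypothetical) keeps only the few most likely labels worth showing to the user.

```swift
/// Returns up to `k` labels whose probability is at least `minimumConfidence`,
/// most likely first. `probabilities` stands in for a classifier's
/// label-to-probability output.
func topLabels(from probabilities: [String: Double],
               k: Int = 3,
               minimumConfidence: Double = 0.1) -> [(label: String, confidence: Double)] {
    probabilities
        .filter { $0.value >= minimumConfidence }   // drop unlikely labels
        .sorted { $0.value > $1.value }             // highest probability first
        .prefix(k)                                  // keep at most k results
        .map { (label: $0.key, confidence: $0.value) }
}

// Usage with made-up classifier output.
let output = ["golden retriever": 0.72, "labrador": 0.2, "tennis ball": 0.05, "cat": 0.03]
for result in topLabels(from: output, k: 2) {
    print(result.label, result.confidence)
}
```

The same filtering applies if you run the model through the Vision framework, where each `VNClassificationObservation` carries an `identifier` and a `confidence`.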

2. Optimize for On-Device Processing

One of the key advantages of Core ML 4.0 is its ability to run models on-device. However, it’s important to optimize your models so they run efficiently on iPhones and iPads. Keep models small enough to avoid slowdowns, and use quantization techniques to reduce model size with minimal loss of accuracy.
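Quantization is easiest to see with numbers. The plain-Swift sketch below shows symmetric 8-bit linear quantization: each Float32 weight is divided by a shared scale, rounded to an `Int8`, and multiplied back when needed. Storing one byte per weight instead of four shrinks the weights roughly fourfold, and the round-trip error per weight is at most half the scale. The `QuantizedTensor` type is hypothetical and purely illustrative; for real models, Apple’s coremltools Python package provides weight-quantization utilities.

```swift
/// Symmetric linear quantization of Float32 weights to Int8.
struct QuantizedTensor {
    let values: [Int8]   // one byte per weight
    let scale: Float     // real-valued size of one quantization step

    init(weights: [Float]) {
        // The largest magnitude maps to ±127; fall back to 1 for an all-zero tensor.
        let maxMagnitude = weights.map { abs($0) }.max() ?? 0
        let step: Float = maxMagnitude > 0 ? maxMagnitude / 127 : 1
        scale = step
        values = weights.map { weight -> Int8 in
            let q = (weight / step).rounded()    // nearest integer level
            return Int8(max(-127, min(127, q)))  // clamp guards against float edge cases
        }
    }

    /// Approximate original weights: integer level times the step size.
    func dequantized() -> [Float] {
        values.map { Float($0) * scale }
    }
}

// Usage: quantize a few weights and inspect the approximation.
let weights: [Float] = [0.5, -1.27, 0.0, 1.0, 0.333]
let tensor = QuantizedTensor(weights: weights)
print(tensor.values, tensor.dequantized())
```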

3. Leverage Model Personalization

Core ML 4.0 allows you to personalize models on a per-user basis. For apps that need to adapt to individual user behavior, like health or fitness tracking apps, this is a powerful feature. By allowing models to learn from user interactions, you can deliver tailored experiences that grow smarter over time.

• Example: A health app that tracks running performance can update its prediction model based on the user’s progress, offering more accurate insights into future workouts.
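Personalization like this does not always need a heavy retraining step to pay off. As a minimal sketch, assuming pace is the only signal, the hypothetical `PacePredictor` below keeps a per-user pace estimate on the device and blends in each new run with an exponentially weighted moving average. It is a simplified stand-in for an updatable Core ML model, chosen because it makes the update rule easy to see.

```swift
/// A per-user running-pace estimate that is refined after every run.
struct PacePredictor {
    /// Weight given to the newest run (0...1); higher values adapt faster.
    let smoothing: Double
    /// Current estimate in minutes per kilometre.
    private(set) var predictedPace: Double

    init(initialPace: Double, smoothing: Double = 0.3) {
        precondition((0.0...1.0).contains(smoothing), "smoothing must be in 0...1")
        self.predictedPace = initialPace
        self.smoothing = smoothing
    }

    /// Exponentially weighted update: blend the latest observed pace into the estimate.
    mutating func update(observedPace: Double) {
        predictedPace = smoothing * observedPace + (1 - smoothing) * predictedPace
    }
}

// Usage: the user starts at 6.0 min/km and then runs a 5.5 min/km session.
var predictor = PacePredictor(initialPace: 6.0)
predictor.update(observedPace: 5.5)
print(predictor.predictedPace)  // 0.3 * 5.5 + 0.7 * 6.0, about 5.85
```

A larger `smoothing` value reacts faster to recent runs; a smaller one stays steadier when a single run is unusually fast or slow.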

4. Monitor Model Performance

It’s essential to continuously monitor your models to ensure they perform well in real-world conditions. Regular testing with real data and user feedback will help refine your models and improve their accuracy. Core ML 4.0 provides tools to assess performance on-device, making it easier to spot issues before they affect users.
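One lightweight way to watch real-world quality is to compare predictions with outcomes the user later confirms, such as a corrected label. The hypothetical `RollingAccuracy` type below, in plain Swift, tracks accuracy over a sliding window of recent predictions so a drop shows up quickly; the window size and alert threshold are assumptions to tune for each app.

```swift
/// Tracks prediction accuracy over a sliding window of recent outcomes.
struct RollingAccuracy {
    let windowSize: Int
    private var outcomes: [Bool] = []   // true = prediction matched the confirmed outcome

    init(windowSize: Int) {
        precondition(windowSize > 0, "windowSize must be positive")
        self.windowSize = windowSize
    }

    mutating func record(predicted: String, actual: String) {
        outcomes.append(predicted == actual)
        if outcomes.count > windowSize {
            outcomes.removeFirst()   // drop the oldest outcome
        }
    }

    /// Fraction of correct predictions in the window, or nil before any data.
    var accuracy: Double? {
        guard !outcomes.isEmpty else { return nil }
        let correct = outcomes.filter { $0 }.count
        return Double(correct) / Double(outcomes.count)
    }

    /// True once the window is full and accuracy has fallen below `threshold`.
    func needsAttention(threshold: Double) -> Bool {
        guard outcomes.count == windowSize, let current = accuracy else { return false }
        return current < threshold
    }
}

// Usage with a tiny window for illustration.
var tracker = RollingAccuracy(windowSize: 3)
tracker.record(predicted: "cat", actual: "cat")
tracker.record(predicted: "dog", actual: "cat")
print(tracker.accuracy ?? 0)  // 0.5
```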

5. Incorporate AR with visionOS

For developers working with AR applications, integrating Core ML 4.0 with visionOS is a must in 2024. Whether it’s recognizing objects in real time or overlaying machine-learning-driven insights onto the user’s field of view, the combination of visionOS and Core ML can create highly immersive AR experiences.

• Pro tip: Combine Core ML’s object recognition with visionOS to create apps that assist users with tasks like home design, real-time translation, or navigation in unfamiliar environments.

Real-World Use Cases for Core ML 4.0

1. Health and Fitness Apps

Fitness apps are taking full advantage of Core ML 4.0 by using on-device machine learning to track user performance, offer real-time coaching, and suggest improvements. By analyzing user data directly on the device, these apps maintain high performance and safeguard user privacy.

2. Retail and E-Commerce Apps

Retail apps can leverage Core ML 4.0 for personalized recommendations, virtual try-ons, and visual search. By analyzing customer behavior, these apps can offer more targeted suggestions and create engaging shopping experiences.

• Example: An app could analyze a user’s browsing habits and provide custom product recommendations based on past purchases or visual searches powered by Core ML.
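Recommendation and visual-search features like this are often built on embeddings: vectors produced by a model (for example an on-device image or text model) that place similar items close together. The plain-Swift sketch below assumes such embeddings already exist for each product (the `Product` type, the embedding values, and the `recommend` helper are hypothetical). It averages the embeddings of items the user has viewed into a profile and ranks the rest of the catalog by cosine similarity to that profile.

```swift
/// A catalog item with a hypothetical embedding from an on-device model.
struct Product {
    let id: String
    let embedding: [Double]
}

/// Cosine of the angle between two vectors: 1 = same direction, 0 = unrelated.
func cosineSimilarity(_ a: [Double], _ b: [Double]) -> Double {
    precondition(a.count == b.count, "embeddings must have the same dimension")
    let dot = zip(a, b).reduce(0.0) { $0 + $1.0 * $1.1 }
    let normA = a.reduce(0.0) { $0 + $1 * $1 }.squareRoot()
    let normB = b.reduce(0.0) { $0 + $1 * $1 }.squareRoot()
    guard normA > 0, normB > 0 else { return 0 }
    return dot / (normA * normB)
}

/// Ranks products the user has not viewed by similarity to their browsing profile.
func recommend(from catalog: [Product], viewedIDs: Set<String>, limit: Int) -> [String] {
    let viewed = catalog.filter { viewedIDs.contains($0.id) }
    guard let dimension = viewed.first?.embedding.count else { return [] }

    // User profile = mean embedding of everything the user has viewed.
    var profile = [Double](repeating: 0, count: dimension)
    for product in viewed {
        for i in 0..<dimension {
            profile[i] += product.embedding[i] / Double(viewed.count)
        }
    }

    // Score each unseen product once, then keep the most similar ones.
    return catalog
        .filter { !viewedIDs.contains($0.id) }
        .map { (id: $0.id, score: cosineSimilarity($0.embedding, profile)) }
        .sorted { $0.score > $1.score }
        .prefix(limit)
        .map { $0.id }
}

// Usage with a toy three-dimensional catalog.
let catalog = [
    Product(id: "running-shoes", embedding: [1, 0, 0]),
    Product(id: "trail-shoes", embedding: [0.9, 0.1, 0]),
    Product(id: "yoga-mat", embedding: [0, 1, 0]),
    Product(id: "blender", embedding: [0, 0, 1]),
]
print(recommend(from: catalog, viewedIDs: ["running-shoes"], limit: 2))
```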

3. AR-Enhanced Apps

AR apps in 2024 can benefit from Core ML’s integration with visionOS. For instance, apps that rely on real-time object recognition and gesture-based controls can use Core ML’s performance improvements to respond faster and keep users engaged.

Conclusion

Core ML 4.0 is revolutionizing the way machine learning is integrated into iOS apps. With its enhanced support for on-device learning, larger models, and integration with visionOS, developers in 2024 have a powerful tool for creating smarter, faster, and more responsive apps. By following best practices, such as optimizing for on-device processing and leveraging model personalization, you can build innovative, intelligent iOS apps that stand out in today’s competitive market.
