As machine learning (ML) becomes an essential part of modern app development, Apple’s Core ML framework continues to evolve, enabling developers to bring cutting-edge ML models to their iOS apps. With the introduction of Core ML 4.0 in iOS 18, Apple has provided powerful tools that allow developers to optimize and integrate machine learning models more seamlessly than ever. In this post, we’ll explore the new features of Core ML 4.0, how it enhances ML capabilities, and what this means for building smarter, faster iOS apps.

The Evolution of Core ML

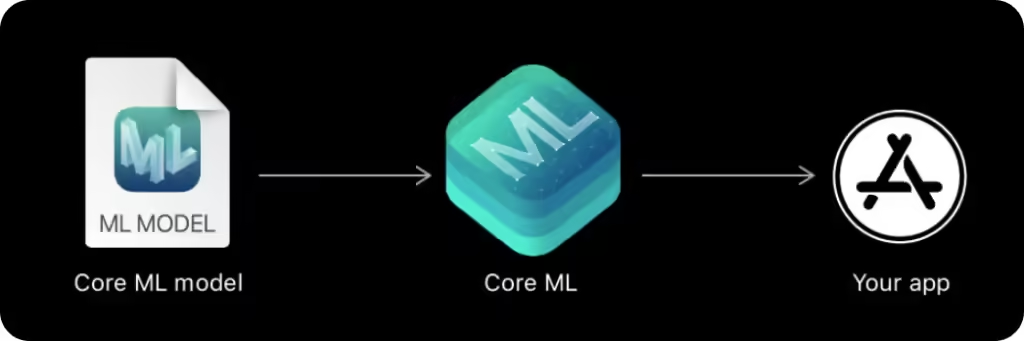

Since its debut in iOS 11, Core ML has allowed developers to run machine learning models locally on iOS devices, empowering apps with features like image recognition, natural language processing, and more. The goal has always been to make it easier for developers to integrate ML models without requiring extensive ML expertise. Over the years, Core ML has seen numerous updates, each iteration refining its performance, expanding model support, and offering more flexibility for developers.

With iOS 18, Core ML 4.0 introduces a suite of enhancements designed to maximize the efficiency and performance of on-device machine learning models. Let’s dive into the most impactful features and see how you can use them to optimize your ML-powered apps.

Key Features of Core ML 4.0

1. On-Device Model Personalization

One of the standout capabilities in Core ML 4.0 is on-device model personalization, which Apple first introduced with Core ML 3 in iOS 13. It allows an updatable model to learn from an individual user’s interactions over time without compromising privacy. For instance, if you’re building a fitness app that uses machine learning to analyze running patterns, Core ML can fine-tune the model directly on the user’s device. Predictions become more personal, and because the training data never leaves the device, nothing has to be uploaded to a server.

By leveraging on-device learning, apps can provide more tailored experiences that adapt to individual user preferences, all while keeping the training data secure on the device.
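To make the idea concrete, here is a minimal conceptual sketch in Python of a model that adapts to one user and can be reset. It is not Core ML code: in a real app the update would go through Core ML’s update API (MLUpdateTask) on an updatable model. The class name, method names, and running features below are all hypothetical and chosen only for illustration.

```python
# Conceptual sketch only: a tiny nearest-neighbor classifier that can be
# personalized with local data. All names here are hypothetical, not Core ML API.
import math


def distance(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


class PersonalizedClassifier:
    def __init__(self, base_examples):
        # Examples shipped with the app: (feature_vector, label) pairs.
        self._base = [(tuple(f), label) for f, label in base_examples]
        # Examples learned from this user; kept in memory on the device only.
        self._local = []

    def update(self, features, label):
        """Learn from one user interaction."""
        self._local.append((tuple(features), label))

    def reset(self):
        """Forget everything learned from the user."""
        self._local.clear()

    def predict(self, features):
        """Return the label of the closest known example."""
        examples = self._base + self._local
        nearest = min(examples, key=lambda ex: distance(ex[0], features))
        return nearest[1]


# Hypothetical features: (pace in min/km, cadence in steps/min).
model = PersonalizedClassifier([
    ((6.5, 160.0), "easy run"),
    ((4.0, 185.0), "tempo run"),
])
print(model.predict((5.0, 176.0)))      # tempo run (closest shipped example)
model.update((5.0, 176.0), "easy run")  # the user corrects the label
print(model.predict((5.1, 175.0)))      # easy run (learned from the user)
model.reset()                           # back to the shipped behavior
```

The separate `reset` method reflects a design choice worth keeping in a real implementation: learned data lives apart from the shipped model, so it can be discarded without reinstalling anything.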

2. New Quantization Techniques for Faster Inference

With iOS 18, Core ML 4.0 introduces advanced quantization techniques that reduce the size of ML models with little loss of accuracy. Quantization stores weights at lower precision, for example as 8-bit or 4-bit integers instead of 32-bit floats. Smaller models mean lower memory usage and often faster inference, which translates to smoother performance, even on older devices. By applying quantization, developers can deploy more complex models that run efficiently across a wide range of devices, from the latest iPhone to older models still in use.

Whether you’re working on a photo-editing app or building real-time object detection, these quantization options help your app stay fast without exhausting memory or battery.
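The size-versus-accuracy trade-off is easier to see with numbers. The sketch below shows the arithmetic of symmetric linear quantization on a handful of made-up weights. It is an illustration of the general technique in plain Python, not the Core ML or coremltools API, and the function names and weight values are assumptions.

```python
# Illustrative only: symmetric linear quantization of a list of weights.
# This mimics the arithmetic, not any Core ML or coremltools call.


def quantize(weights, bits):
    """Map floats to signed integer codes of the given bit width."""
    qmax = 2 ** (bits - 1) - 1            # 127 for 8-bit, 7 for 4-bit
    scale = max(abs(w) for w in weights) / qmax
    if scale == 0.0:
        scale = 1.0                       # all-zero weights: any scale works
    codes = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float weights from integer codes."""
    return [c * scale for c in codes]


def max_abs_error(weights, bits):
    """Largest difference between original and round-tripped weights."""
    codes, scale = quantize(weights, bits)
    restored = dequantize(codes, scale)
    return max(abs(w - r) for w, r in zip(weights, restored))


# Hypothetical weights from one layer.
weights = [0.82, -0.41, 0.05, -0.97, 0.33, 0.0, 0.61, -0.12]

for bits in (8, 4):
    error = max_abs_error(weights, bits)
    print(f"{bits}-bit: max error {error:.4f}, size {bits / 32:.1%} of float32")
```

Fewer bits shrink the model further but increase the rounding error, which is why it is worth measuring accuracy at each setting rather than assuming the smallest model is good enough.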

3. Support for Transformer Models

Core ML 4.0 also adds support for transformer models, one of the most powerful architectures in machine learning. Transformers are known for their superior performance in natural language processing (NLP) tasks, but they also excel in other domains such as image and sequence data. With Core ML 4.0, developers can now leverage pre-trained transformer models in their apps, unlocking new possibilities for applications like language translation, summarization, sentiment analysis, and more.

The inclusion of transformer models enhances the capabilities of apps dealing with text, making it easier to implement features such as real-time language translation or AI-powered text prediction.

4. Improved Multi-Task Learning

In previous iterations of Core ML, developers typically shipped a separate model for each task. With multi-task learning in Core ML 4.0, it’s now possible to create models that handle multiple tasks at once. For instance, an app can use a single model to both identify objects in an image and recognize the text within it. Because the tasks can share most of their layers, this reduces the need to run several models side by side, which improves efficiency and lowers the computational load on the device.

By combining different tasks into one model, developers can streamline app performance, cut down on memory usage, and simplify their ML pipelines.
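A rough calculation shows where the memory savings come from. The sketch below compares the parameter count of two single-task models against one model with a shared feature extractor and two task heads. Every layer size is hypothetical and picked only to make the arithmetic easy to follow.

```python
# Back-of-the-envelope parameter counts; all layer sizes are hypothetical.


def dense_params(n_in, n_out):
    """Weights plus biases of one fully connected layer."""
    return n_in * n_out + n_out


# Shared feature extractor used by both tasks.
backbone = dense_params(1024, 512) + dense_params(512, 256)
# Small task-specific output layers.
object_head = dense_params(256, 80)   # e.g. 80 object classes
text_head = dense_params(256, 40)     # e.g. 40 character classes

# Two separate models each carry their own copy of the backbone.
two_models = (backbone + object_head) + (backbone + text_head)
# One multi-task model stores the backbone once.
one_model = backbone + object_head + text_head

print("two single-task models:", two_models)
print("one multi-task model:  ", one_model)
print(f"parameters saved: {1 - one_model / two_models:.1%}")
```

The saving equals exactly one copy of the shared layers, so the benefit grows with how much of the network the tasks can genuinely share.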

5. Seamless Integration with Create ML 3.0

To further improve the developer experience, Core ML 4.0 works seamlessly with Create ML 3.0, Apple’s ML model training tool. Create ML 3.0 offers a streamlined workflow for training custom models using data such as images, video, or text—without requiring deep ML knowledge. Once a model is trained in Create ML 3.0, it can be easily integrated into your app using Core ML 4.0.

For developers working on apps that need custom machine learning models, the smooth interoperability between Create ML and Core ML means faster development cycles and more intuitive workflows.

How Core ML 4.0 Benefits iOS 18 App Development

The improvements in Core ML 4.0 are more than just technical advancements—they empower developers to create more intelligent, responsive, and efficient apps in the evolving iOS 18 ecosystem. Whether you’re building an app that relies on image processing, language understanding, or user personalization, Core ML 4.0 has something to offer.

Here are a few ways Core ML 4.0 can transform your iOS 18 development:

• Enhanced Personalization: With on-device learning, you can offer more personalized experiences that adapt over time, leading to better user satisfaction.

• Speed and Efficiency: Advanced quantization helps your ML models run faster and use fewer resources, even on older devices.

• AI-Powered Text: Transformer model support opens the door to more sophisticated language-based features such as chatbots, translators, or smart assistants.

• Better Multi-Tasking: Multi-task learning allows apps to perform multiple functions with a single, more efficient model, cutting down on app size and complexity.

Best Practices for Optimizing ML Models with Core ML 4.0

To make the most of Core ML 4.0, consider the following best practices for optimizing your machine learning models in iOS 18:

1. Quantize Your Models: Use the new quantization techniques to reduce model size and improve inference speed. Test different levels of quantization to find the best balance between performance and accuracy.

2. Leverage On-Device Learning: Implement on-device personalization to improve the user experience without compromising privacy. Make sure to provide users with the option to reset learned data if needed.

3. Use Pre-Trained Models When Possible: Instead of training your own models from scratch, take advantage of existing pre-trained models, especially transformers, to save time and resources.

4. Monitor Performance: Continuously test your app’s ML performance across different devices to ensure smooth operation. Use Instruments to monitor memory usage and optimize as needed.

5. Stay Updated with Create ML: Keep an eye on updates to Create ML, as it adds new templates and tools for training custom models that integrate easily with Core ML.

Conclusion

Core ML 4.0 is a game-changer for machine learning on iOS, providing the tools and optimizations needed to bring smarter and faster ML-powered apps to life. With new features like on-device personalization, transformer model support, and multi-task learning, Core ML 4.0 empowers developers to build more intelligent apps that offer better performance and user experiences.

As iOS 18 continues to push the boundaries of what’s possible, Core ML 4.0 ensures that machine learning remains a cornerstone of innovation in the Apple ecosystem. Whether you’re looking to enhance your app’s AI capabilities or optimize its performance, Core ML 4.0 gives you the flexibility and power to achieve your goals.
